Drawn to Life premiered on November 18th and is one of Cirque du Soleil’s latest stage productions. Created as a permanent show for Disney Springs in Orlando, this live production combines the magic of hand-drawn animation, circus acrobatics and high-end video effects.

The creators’ mandate was to celebrate the art of animation and bring the tools of the craft, such as brushes and sheets, to life. To achieve their goals and create moments of pure magic through video scenography, they relied on PHOTON and VYV’s skilled software development team.

Since some of the key features packaged in the recently released version 10 of PHOTON were used extensively on Drawn to Life, we thought we would present them within the context of the production. While the lab environment is perfect for invention and software development, the real, definitive testing ground for technology is deployment on a production. See how we managed to breathe life into inanimate objects and create magic on stage.

Multi-view calibration

This new calibration method relies on a multi-view geometry computation to perform an accurate 3D reconstruction of a scene. In layman’s terms, this means that PHOTON now uses cutting-edge algorithms that optimize calibration when using multiple cameras.

We now consider multi-view to be our most accurate, precise and robust calibration method. Surprisingly, it also proves to be one of the easiest to manage once show operation has begun. As an example of the optimization potential of this technique, our team was able to scale down the need for passive optical markers during calibration. Instead of the initially estimated 120 optical markers fitted on 30 cable slings, only 26 markers mounted on 13 lightweight stands were needed to calibrate Drawn to Life’s stage area.
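To give a feel for what a multi-view geometry computation involves, here is a minimal sketch of linear (DLT) triangulation, which recovers one marker's 3D position from its pixel coordinates in several cameras. It is a textbook building block and not PHOTON's actual calibration code; the `camera` helper, the focal length, the camera positions and the marker position are all hypothetical values chosen for illustration.

```python
import numpy as np

def triangulate(projections, pixels):
    """Linear (DLT) triangulation of one 3D point seen in two or more views."""
    rows = []
    for P, (u, v) in zip(projections, pixels):
        # Each view contributes two linear equations in the homogeneous point X.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]                      # null-space direction = least-squares solution
    return X[:3] / X[3]             # back from homogeneous to 3D coordinates

def camera(position, focal=1000.0, center=(960.0, 540.0)):
    """Hypothetical pinhole camera at `position`, looking down +z, no rotation."""
    K = np.array([[focal, 0.0, center[0]],
                  [0.0, focal, center[1]],
                  [0.0, 0.0, 1.0]])
    Rt = np.hstack([np.eye(3), -np.asarray(position, dtype=float).reshape(3, 1)])
    return K @ Rt

def project(P, X):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Assumed test scene: one marker observed by three cameras.
marker = np.array([0.5, -0.2, 8.0])
cams = [camera((-2, 0, 0)), camera((2, 0, 0)), camera((0, 1.5, 0))]
pixels = [project(P, marker) for P in cams]
estimate = triangulate(cams, pixels)
```

With noise-free observations the estimate matches the marker position; with real camera noise, additional views over-determine the system, which is one plausible reason more cameras can mean fewer required markers.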

Compositing blending

Usually, media server compositing operations occur in texture space: layers of 2D content are stacked upon each other and sent as a final texture to rest on a 3D surface representing a screen. We thought another approach was lacking in our toolkit. By feeding mapped 3D content back to the texture space, we could offer additional flexibility in the rendering pipeline and allow users to apply post-process image treatment to the rendered content.

Drawn to Life’s set features seven layers of motorized curtains that can move in and out of the stage to create a backdrop onto which video scenery is projected. The curtains’ movements end up changing the depth of the set. To compensate for this situation, which can be a nightmare when using convergent projectors, the programmers used PHOTON’s new compositing blending feature. By feeding back the calibrated 3D content as textures, it was possible to blend through successive rendered scenes organized as layers along the z-axis. Using a simple fader on a controller, the operator was able to compensate for the geometry changes caused by the moving curtains in real time.
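The fader-driven blend described above can be pictured with a small sketch. It assumes a plain linear crossfade between the two rendered layers nearest to the fader position; PHOTON's actual blending may well differ, and the function name and tiny 2x2 "frames" are hypothetical.

```python
import numpy as np

def blend_layers(layers, fader):
    """Crossfade through a z-ordered stack of rendered frames.

    fader runs from 0.0 (first layer only) to 1.0 (last layer only).
    """
    layers = np.asarray(layers, dtype=float)
    fader = min(max(fader, 0.0), 1.0)          # clamp the controller value
    pos = fader * (len(layers) - 1)            # position along the layer stack
    lower = int(np.floor(pos))
    upper = min(lower + 1, len(layers) - 1)
    w = pos - lower                            # weight of the deeper layer
    return (1.0 - w) * layers[lower] + w * layers[upper]

# Three tiny stand-in renders, one per assumed curtain depth.
frames = [np.full((2, 2), v) for v in (0.0, 0.5, 1.0)]
mixed = blend_layers(frames, 0.25)             # halfway between layers 0 and 1
```

Because the blend happens on rendered textures, a single controller value is enough to move the image between depth-specific renders without recalibrating anything.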

Vertex tracking

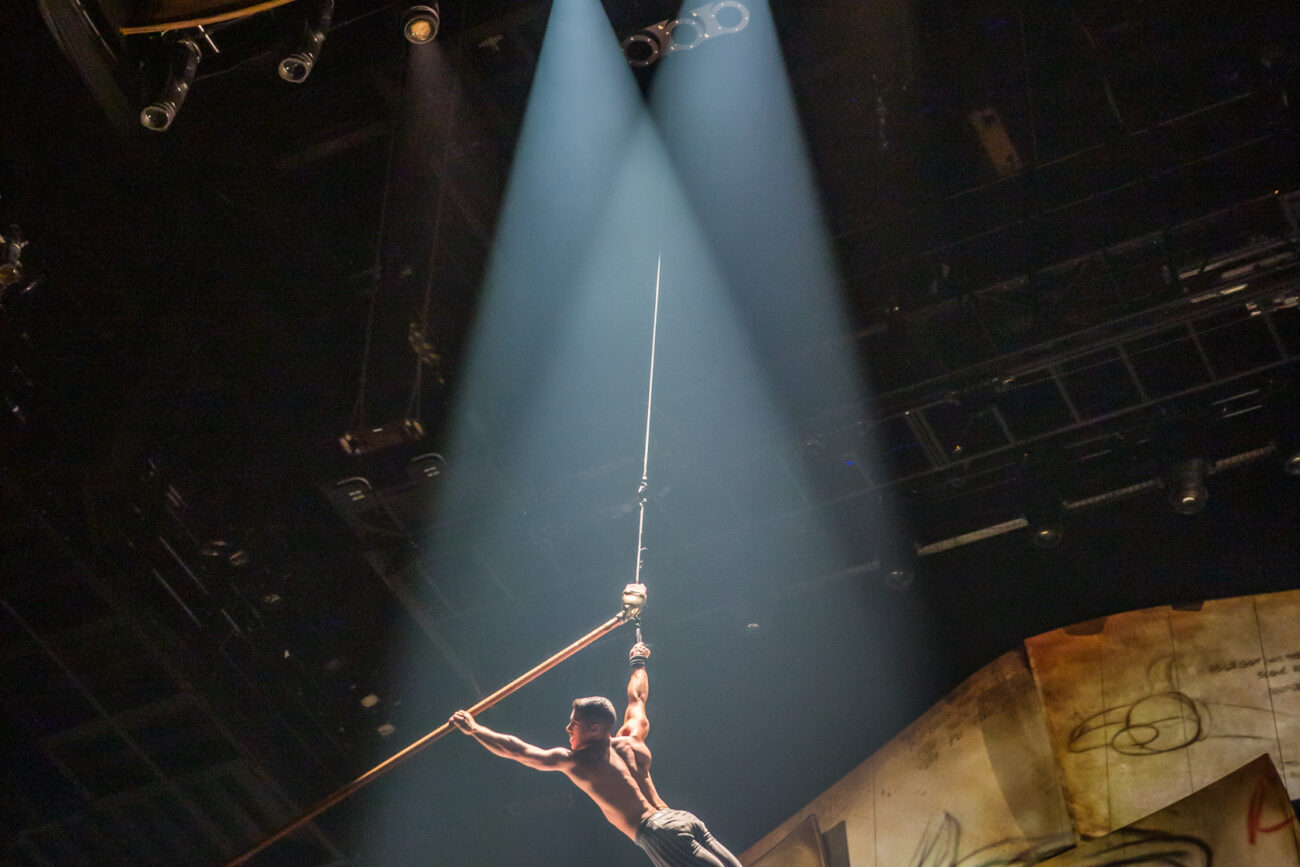

Real-time projector alignment for moving screens is one of PHOTON’s strong suits. But what if the screen’s shape changes over time? Of course, we have had to compensate for minor structural torsions on some productions, but that was nothing like what was planned for Drawn to Life.

One of the acts of the production relied on five large animation sheets that were controlled by puppeteers. The sheets would not only move on the stage but also twist and bend. Tracking morphological transformations is something we had dreamed of for a long time, until we came up with a solution: by inserting multiple optical markers in the screen structure and tying these to virtual vertices (points along the screen surface), we used a physical model simulation to estimate, with a high degree of fidelity, the ongoing transformation of the material. Morphological tracking is now show-ready and part of PHOTON’s bag of tricks.
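As an illustration of tying markers to virtual vertices, the sketch below pins a few vertices of a chain to tracked marker positions and lets the untracked vertices settle so that every segment keeps its rest length. This is a simple position-based relaxation chosen for brevity, not the physical model VYV uses; the chain layout, marker positions and `relax_chain` function are hypothetical.

```python
import numpy as np

def relax_chain(vertices, pinned, rest_length, iterations=200):
    """Estimate untracked vertex positions along a strip of material.

    vertices: (N, 3) initial guess; pinned: {vertex index: marker position}.
    """
    pts = np.array(vertices, dtype=float)
    for idx, pos in pinned.items():
        pts[idx] = pos                         # tracked vertices follow their markers
    for _ in range(iterations):
        for a in range(len(pts) - 1):
            b = a + 1
            w_a = 0.0 if a in pinned else 1.0  # pinned vertices never move
            w_b = 0.0 if b in pinned else 1.0
            delta = pts[b] - pts[a]
            dist = np.linalg.norm(delta)
            if dist == 0.0 or w_a + w_b == 0.0:
                continue
            # Move the free end(s) so the segment returns to its rest length.
            step = (dist - rest_length) / (dist * (w_a + w_b)) * delta
            pts[a] += w_a * step
            pts[b] -= w_b * step
    return pts

# Flat strip of five vertices, then three markers report a bent shape.
flat = [(float(x), 0.0, 0.0) for x in range(5)]
markers = {0: (0.0, 0.0, 0.0), 2: (1.6, 1.0, 0.0), 4: (3.2, 0.0, 0.0)}
bent = relax_chain(flat, markers, rest_length=1.0)
segment_lengths = np.linalg.norm(np.diff(bent, axis=0), axis=1)
```

A real sheet would need a 2D grid of vertices plus bending and shear terms, but the principle is the same: a handful of markers constrains a model that fills in the rest of the surface.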

Tracker monitoring

Optical tracking systems provide precise information that helps achieve tasks such as projector calibration, real-time alignment and interactive effects. However, they generate a vast amount of data, which complicates technicians’ and programmers’ work. Trying to find a defective or noisy optical marker on the stage of a large production is like finding a needle in a haystack.

Featuring no fewer than 17 tracked props and costumes, Drawn to Life is one of those live productions that could have proved problematic. Thanks to ALBION’s monitoring feature, our team was able to follow a precise reconstruction of the moving props in real time and identify, or even anticipate, tracking issues.
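One simple way to spot a noisy marker automatically is to score each track by its frame-to-frame jitter. The sketch below is an assumption about how such a check could work, not a description of ALBION's monitoring; the marker names, the simulated tracks and the threshold are all hypothetical.

```python
import numpy as np

def jitter_score(track):
    """RMS of the second difference of a (frames, 3) position track.

    Smooth motion yields tiny second differences; measurement noise does not.
    """
    accel = np.diff(track, n=2, axis=0)
    return float(np.sqrt(np.mean(np.sum(accel ** 2, axis=1))))

def find_noisy_markers(tracks, threshold):
    """Return the names of markers whose jitter exceeds the threshold."""
    return sorted(name for name, t in tracks.items() if jitter_score(t) > threshold)

# Simulated data: two markers follow the same smooth path, one with added noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 120)
smooth = np.column_stack([t, np.sin(t), np.zeros_like(t)])
tracks = {
    "pen_cap": smooth,
    "brush_tip": smooth + rng.normal(scale=0.01, size=smooth.shape),
}
noisy = find_noisy_markers(tracks, threshold=0.001)
```

The threshold would have to be tuned per venue, since the acceptable jitter depends on camera distance, frame rate and how fast the props legitimately move.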

Full sACN implementation

The integration of sACN communication in PHOTON is the definitive step towards our goal of providing a seamless integration with lighting equipment. Even though we offered support for Art-Net, we soon felt limitations with this prior lighting protocol integration. We lacked access to critical data provided by modern lighting fixtures and consoles.

Now, PHOTON’s sACN integration provides an accurate level of detail about lighting fixtures and allows interactive control over data coming from a lighting desk. Fully transparent for any lighting desk programmer, our system now supports features such as automated dimmer fading, which proved more than useful on Drawn to Life.
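For readers unfamiliar with sACN, the sketch below assembles one data packet by hand, following the three-layer layout (root, framing, DMP) of ANSI E1.31 as we understand it, with a linearly faded dimmer level in the first channel. It only builds the bytes and sends nothing; the function names, source name and fade values are hypothetical, and this is not how PHOTON implements the protocol.

```python
import struct
import uuid

ACN_ID = b"ASC-E1.17\x00\x00\x00"   # 12-byte ACN packet identifier
SACN_PORT = 5568                     # UDP port used by sACN

def flags_and_length(length):
    """Upper 4 bits are the flags 0x7, lower 12 bits the PDU length."""
    return 0x7000 | (length & 0x0FFF)

def fade_level(start, end, progress):
    """Linear dimmer fade; progress runs from 0.0 to 1.0."""
    progress = min(max(progress, 0.0), 1.0)
    return round(start + (end - start) * progress)

def multicast_address(universe):
    """Multicast group for a universe: 239.255.<high byte>.<low byte>."""
    return f"239.255.{universe >> 8}.{universe & 0xFF}"

def build_sacn_packet(universe, levels, sequence=0, priority=100,
                      source_name="sketch", cid=None):
    """Assemble one sACN data packet carrying DMX levels (values 0-255)."""
    cid = cid or uuid.uuid4().bytes              # 16-byte sender identifier
    slots = bytes([0]) + bytes(levels)           # DMX start code 0, then levels
    dmp = struct.pack("!HBBHHH", flags_and_length(10 + len(slots)),
                      0x02, 0xA1, 0x0000, 0x0001, len(slots)) + slots
    name = source_name.encode("utf-8")[:63].ljust(64, b"\x00")
    framing_body = struct.pack("!I64sBHBBH", 0x00000002, name, priority,
                               0, sequence & 0xFF, 0, universe) + dmp
    framing = struct.pack("!H", flags_and_length(2 + len(framing_body))) + framing_body
    root_body = struct.pack("!I16s", 0x00000004, cid) + framing
    return struct.pack("!HH12sH", 0x0010, 0x0000, ACN_ID,
                       flags_and_length(2 + len(root_body))) + root_body

# One universe of 512 channels, channel 1 at 20% of a 0-to-255 fade.
levels = [fade_level(0, 255, 0.2)] + [0] * 511
packet = build_sacn_packet(universe=1, levels=levels)
```

A full universe yields a 638-byte packet, which would be sent over UDP to `multicast_address(universe)` on `SACN_PORT`, with the sequence number incremented for each packet.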

Event-driven programming and musical interactions

VYV takes pride in offering a versatile technological solution. Since we have an extensive background in designing systems for circus shows, we know that a locked, fully automated linear cue execution system is impractical; artists need some elbow room to perform at their peak. For magic to occur during a show, time needs to dilate and contract. Following the lead set by onstage performers is often the best option when trying to pace media interventions on a live production.

Fortunately, PHOTON features a flexible UDP messaging system that simplifies interactions between the media server and performers. Drawn to Life did not use timecode to control the flow of events that would constitute the core of the show. Instead, the band’s keyboardist would send UDP messages to the cue system and direct the progression of the show. Putting the performers at the heart of the technical system of a show is one of the core reasons for which VYV was founded.
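The idea of a performer triggering cues over UDP can be sketched in a few lines. The `GO <cue name>` text format, the cue name and the loopback addresses below are assumptions made for the example; the source does not describe PHOTON's actual message syntax.

```python
import socket

def send_cue(cue_name, host, port):
    """Fire-and-forget cue trigger, e.g. bound to a key on a keyboard rig."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(f"GO {cue_name}".encode("ascii"), (host, port))

def receive_cue(sock):
    """Wait for one datagram and return the cue name, or None if malformed."""
    data, _ = sock.recvfrom(1024)
    verb, _, cue = data.decode("ascii", errors="replace").partition(" ")
    return cue if verb == "GO" and cue else None

# Loopback demonstration: the "cue system" listens, the "keyboardist" sends.
listener = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
listener.bind(("127.0.0.1", 0))          # port 0 lets the OS pick a free port
listener.settimeout(2.0)
port = listener.getsockname()[1]

send_cue("brush_splash", "127.0.0.1", port)
received = receive_cue(listener)
listener.close()
```

Since UDP gives no delivery guarantee, a production setup would likely add acknowledgements or repeated sends, but the event-driven principle stays the same: the cue advances when the performer says so, not when a timecode does.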

Nodal programming for interactive effects

Breathing life into the animator’s tools was one of the production’s key creative directions. Multiple props, such as an oversized brush and a pen, were integrated into circus numbers and had to create splashes of paint and drawn lines while being manipulated by performers.

Using optical markers integrated into the props, the creative team was able to create the illusion of a live painting show, thanks to PHOTON’s FX Graph. This nodal programming system is designed to support the creation of custom interactive effects and proved indispensable to Drawn to Life’s creative team.
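To illustrate what nodal programming means in this context, here is a minimal sketch of a node graph that turns a tracked marker's motion into a paint stroke whose width grows with speed. The `Node` and `Source` classes, the frame dictionary keys and the width formula are hypothetical and are not taken from FX Graph.

```python
import math

class Node:
    """A graph node: computes its value from the values of its input nodes."""
    def __init__(self, func, *inputs):
        self.func = func
        self.inputs = inputs

    def evaluate(self, frame):
        return self.func(*(node.evaluate(frame) for node in self.inputs))

class Source(Node):
    """A leaf node that reads one field from the current tracking frame."""
    def __init__(self, key):
        super().__init__(None)
        self.key = key

    def evaluate(self, frame):
        return frame[self.key]

# Wire the graph: marker position -> speed -> stroke width -> stroke.
position = Source("brush_position")
previous = Source("brush_previous")
speed = Node(math.dist, position, previous)               # distance moved this frame
width = Node(lambda s: min(1.0 + 0.5 * s, 10.0), speed)   # faster means wider, capped
stroke = Node(lambda p, w: {"at": p, "width": w}, position, width)

frame = {"brush_position": (3.0, 4.0, 0.0), "brush_previous": (0.0, 0.0, 0.0)}
result = stroke.evaluate(frame)
```

The appeal of the approach is that an effect is changed by rewiring nodes or swapping a single function, rather than by rewriting a monolithic script.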

Writer and Show Director:

Michel Laprise

Production Director:

Matthew Whelan

Projection Designers:

Mathieu St-Arnaud, Félix Fradet-Faguy

VYV PHOTON Specialists:

Nicolas Dupont, Gabrielle Martineau, Laurent Schmitz